7 things to avoid when building Airflow DAG pipelines

What to avoid when building Airflow pipelines to keep the code sane and stable and to steer clear of common pitfalls.

Apache Airflow is a powerful tool used by many organizations to run scheduled batch-processing pipelines. Like any software, Airflow follows a set of assumptions and design decisions that make it a good fit for some jobs and not for others. Some parts of it are hard to change, while others are very flexible. I'll show a few rules we follow at Shareablee to make our pipelines more reliable and stable.

Some of Airflow's design choices let you cut yourself, but they also give you a bunch of powerful concepts. Below are a few rules that we found good enough to follow in the majority of our pipelines. Before deploying any new code, we review changes against this list to make sure we do not create new sources of problems when we really do not need to.

The Airflow, code and infrastructure context our rules are based on:

- Airflow runs inside Kubernetes (Airflow deployments are code-immutable images)

- Airflow version is 1.10.*

- all Airflow code, DAGs and deployment definitions reside under version control

- building and deployment are relatively fast

- there is a local environment (docker-compose integrated with Kubernetes to run tasks) where developers can test pipelines quite easily

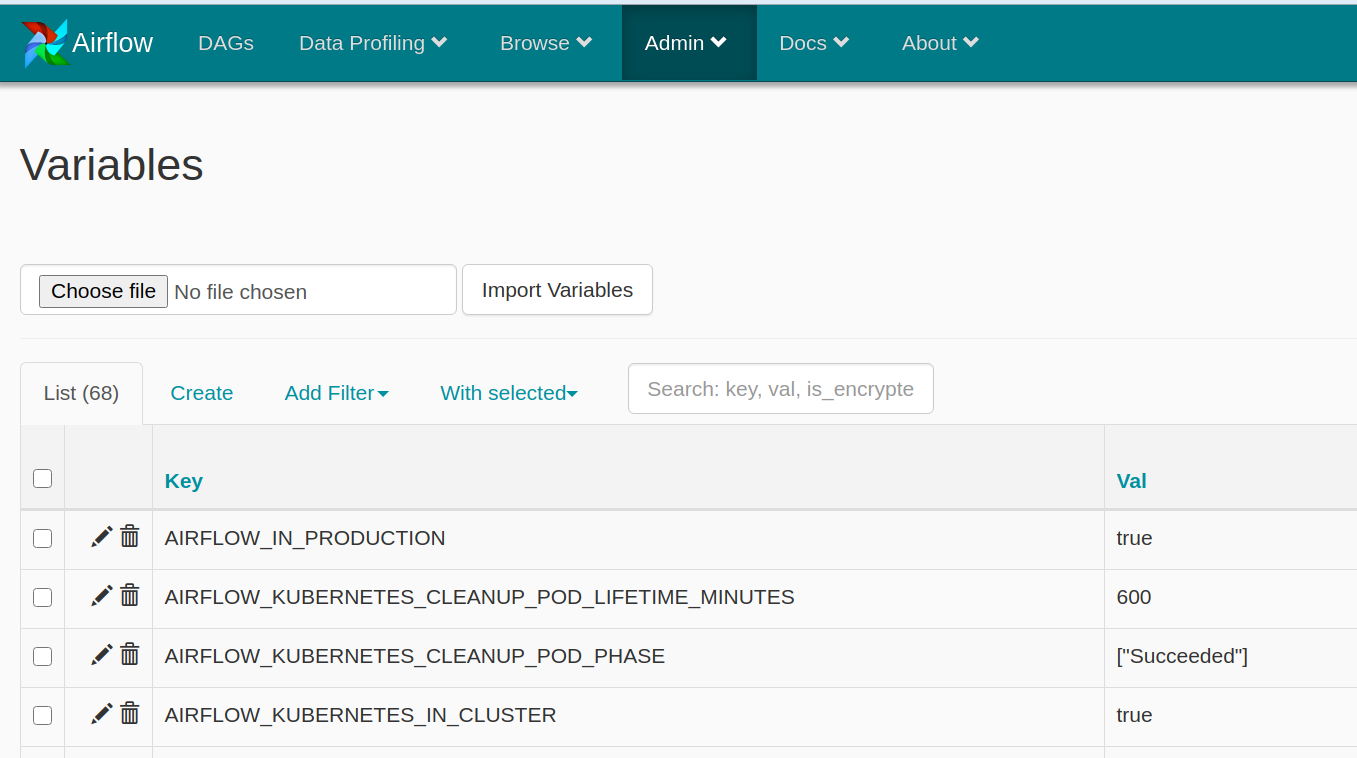

1. Avoid Airflow Variables For Configuration

Infrastructure as code is a great concept. It gives you traceability and auditability. Airflow Variables do not. How much time do you save when you change a Variable inside Airflow once a month instead of pushing the change to the code repository and triggering a deployment? Is this flexibility and saved time worth giving up traceability and debuggability in case of a failure?

No, it is not.

Variables are quite reasonable as secret storage to keep secrets out of the code, but even that is only partially true, as secrets stored in Airflow can leak into logs or be rendered in the UI (in the templates section of the DAG panel).
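A minimal sketch of what keeping configuration in code can look like, assuming a hypothetical `pipeline_config.py` module that lives next to the DAG files and ships in the same image. `ReportConfig` and `DAILY_REPORT` are illustrative names, not part of Airflow. Every change to these values goes through a commit, a review and a deployment, so the history of who changed what, and when, lives in version control.

```python
from dataclasses import dataclass


# Hypothetical configuration for one pipeline. frozen=True makes instances
# immutable, so no DAG file can accidentally mutate values at parse time.
@dataclass(frozen=True)
class ReportConfig:
    source_bucket: str
    output_table: str
    lookback_days: int
    schedule_interval: str


# Single source of truth: changing it means a commit, a review and a deploy,
# which is the audit trail an Airflow Variable edited in the UI does not have.
DAILY_REPORT = ReportConfig(
    source_bucket="example-raw-data",
    output_table="analytics.daily_report",
    lookback_days=3,
    schedule_interval="0 6 * * *",
)

# In a DAG file, import the value instead of calling Variable.get(...):
#   from pipeline_config import DAILY_REPORT
#   dag = DAG("daily_report", schedule_interval=DAILY_REPORT.schedule_interval, ...)
```

Freezing the dataclass is a deliberate choice: the same module may be imported by several DAG files in one process, and immutable values rule out one DAG silently changing what another one reads.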

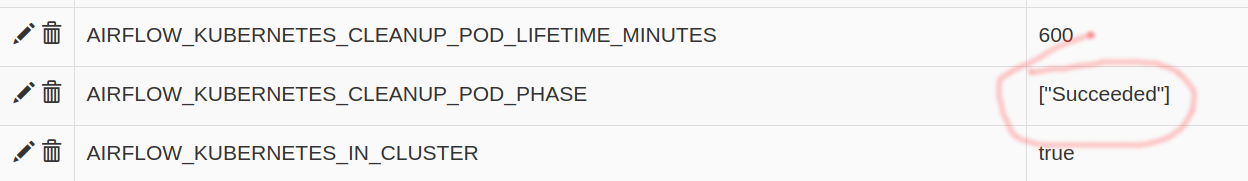

2. Avoid Complex Airflow Variables

Airflow Variables are very flexible in terms of value structure. You can put anything into them and serialize it yourself, or use Airflow's built-in JSON support (`Variable.get(key, deserialize_json=True)`). A huge JSON blob as a configuration parameter sounds like a great option until you realize that:

- in the UI, the whole JSON is edited in a single HTML input field, so changing the value, if it is more than a few characters long, is pure pain, and there is no validation of the JSON structure (no feedback on errors)

- there is no validation of the JSON values either (no feedback on errors, no callback to inject your own validation)

- when somebody damages the value, the change is not tracked. Debugging that, especially if the Variable is used by a few heavily used DAGs, will be a very stressful event

Keep configuration inside the code, especially complex configuration.
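Keeping complex configuration in code also gives back the validation the Variables UI lacks. The sketch below assumes a hypothetical export pipeline with a list of per-client settings; `ClientExport`, `ExportConfig` and `EXPORT_CONFIG` are illustrative names. Each structure checks itself when it is constructed, and because `EXPORT_CONFIG` is built at import time, a broken edit fails in a unit test or a local DAG parse rather than in production.

```python
from dataclasses import dataclass
from typing import Tuple

ALLOWED_FORMATS = frozenset({"csv", "parquet"})


@dataclass(frozen=True)
class ClientExport:
    client_id: str
    output_format: str
    retention_days: int

    def __post_init__(self) -> None:
        # Per-entry validation with readable error messages.
        if not self.client_id:
            raise ValueError("client_id must not be empty")
        if self.output_format not in ALLOWED_FORMATS:
            raise ValueError(
                f"{self.client_id}: unknown format {self.output_format!r}, "
                f"expected one of {sorted(ALLOWED_FORMATS)}"
            )
        if self.retention_days <= 0:
            raise ValueError(f"{self.client_id}: retention_days must be positive")


@dataclass(frozen=True)
class ExportConfig:
    clients: Tuple[ClientExport, ...]

    def __post_init__(self) -> None:
        # Cross-entry validation that a raw JSON blob cannot express on its own.
        ids = [c.client_id for c in self.clients]
        duplicates = sorted({i for i in ids if ids.count(i) > 1})
        if duplicates:
            raise ValueError(f"duplicate client ids: {duplicates}")


# Module-level config: an invalid edit raises ValueError as soon as it is imported.
EXPORT_CONFIG = ExportConfig(
    clients=(
        ClientExport("acme", "parquet", 30),
        ClientExport("globex", "csv", 7),
    )
)
```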

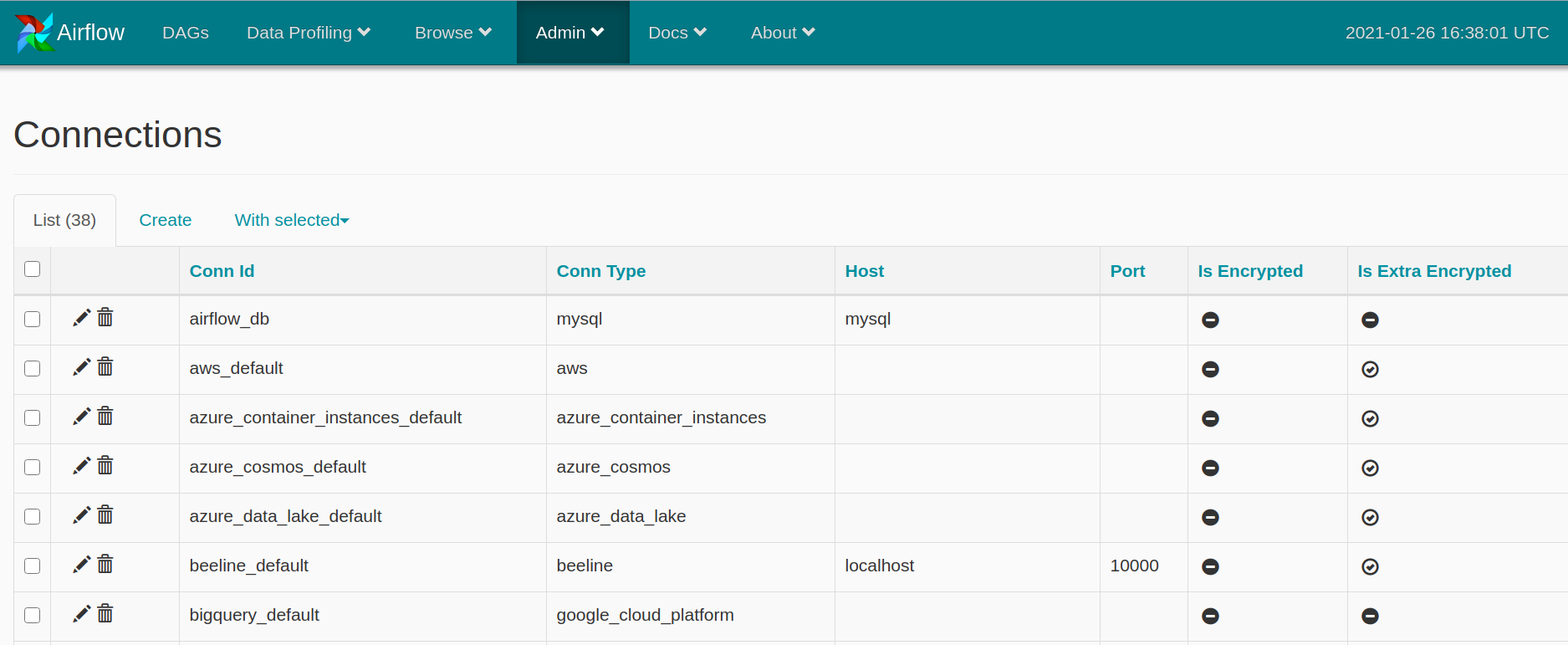

3. Avoid Airflow Connections

Similarly to Variables, Airflow gives you Connections. They look like the intended way to share common credentials for other services and to provide them as ready-made Python connections (through hooks) in a PythonOperator.

In our case, we mostly use predefined Docker images and pass credentials to them (from Variables or from Kubernetes secrets), so Connections are not used at all.
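On the image side, this approach only requires the process to read its credentials from the environment. In Airflow 1.10 the contrib `KubernetesPodOperator` can expose a Kubernetes secret to the pod as environment variables through its `secrets` argument. Below is a minimal sketch of a matching entrypoint helper; the variable names `DB_USER` and `DB_PASSWORD` and the `Redacted` wrapper are hypothetical. Failing fast on a missing variable surfaces misconfiguration at task start, and the wrapper keeps the raw value out of accidental `print` or log calls, which also addresses the log-leak concern mentioned earlier.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


class Redacted:
    """Holds a secret value but never shows it in str() or repr()."""

    def __init__(self, value: str) -> None:
        self._value = value

    def reveal(self) -> str:
        # Explicit accessor: the only way to get at the raw value.
        return self._value

    def __repr__(self) -> str:
        return "Redacted('***')"

    __str__ = __repr__


@dataclass(frozen=True)
class DbCredentials:
    user: str
    password: Redacted  # repr() of the dataclass shows Redacted('***')


def load_db_credentials(env: Optional[Mapping[str, str]] = None) -> DbCredentials:
    """Read credentials injected into the container; fail fast if any is missing."""
    env = os.environ if env is None else env
    missing = [name for name in ("DB_USER", "DB_PASSWORD") if not env.get(name)]
    if missing:
        raise RuntimeError(f"missing required environment variables: {missing}")
    return DbCredentials(user=env["DB_USER"], password=Redacted(env["DB_PASSWORD"]))
```

Accepting an optional mapping instead of always reading `os.environ` keeps the helper easy to unit-test.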

4. Avoid sharing Airflow Variables and Secrets between DAGs

Even if you are going to use PythonOperator a lot and share the same connection over and over, that connection needs access equal to the sum of the permissions of all the tasks you run with it. If you always give jobs the full permission set, you might be fine with sharing connections. But if you would like granular permissions and want to grant each job only the minimal permissions it requires, you probably want to separate credentials at least per DAG.
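One way to keep that separation honest is to declare in code which secrets each DAG may use and to check in CI that no two DAGs share one. The sketch below assumes a hypothetical `DAG_SECRETS` mapping from `dag_id` to Kubernetes secret names; it is plain Python, so it can run as a unit test without Airflow.

```python
from collections import defaultdict
from typing import Dict, List, Mapping, Sequence

# Hypothetical mapping: each DAG lists the Kubernetes secrets its tasks may mount.
DAG_SECRETS: Dict[str, List[str]] = {
    "daily_report": ["daily-report-db-readonly"],
    "client_export": ["client-export-s3-writer"],
    "ingest_events": ["ingest-events-db-writer", "ingest-events-api-token"],
}


def find_shared_secrets(mapping: Mapping[str, Sequence[str]]) -> Dict[str, List[str]]:
    """Return every secret used by more than one DAG, with the DAGs using it."""
    users: Dict[str, List[str]] = defaultdict(list)
    for dag_id, secrets in mapping.items():
        for secret in set(secrets):  # ignore repeats within a single DAG
            users[secret].append(dag_id)
    return {secret: sorted(dags) for secret, dags in users.items() if len(dags) > 1}
```

A CI test can then simply assert `find_shared_secrets(DAG_SECRETS) == {}`.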

5. Avoid dynamic DAG manipulation (aka runtime branching)

Airflow gives you a very powerful way to create DAGs: you can use the full power of Python to create DAGs dynamically. With great power comes great responsibility. It is easy to make DAG generation very complex, hard to read and, worse, error-prone. Sometimes dynamic DAG generation is hard to avoid, especially when you want to produce quite a few DAGs from the same template, but try to keep it as simple as possible.

I advise avoiding any dynamic code that produces different DAG structures (sure, you still need some parametrization). Having multiple different dynamic branches (aka if statements in the DAG generation code) makes it, from my point of view, more error-prone and much harder to debug than plain, simple jobs.

If you need to generate several different but similar structures, just write a separate piece of code for each. It seems redundant, but when you are debugging a broken DAG at night in a panic, you will thank yourself.
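A sketch of the kind of generation this rule still allows: one factory, parametrized only by values, that always produces the same shape. To keep it runnable without Airflow installed, the DAG is modelled as a plain `PipelineSpec` of task ids and dependencies; in a real DAG file the factory body would create an `airflow.DAG` and its operators instead, and each result would be assigned to a module-level global so Airflow discovers it. `PipelineSpec`, `build_ingest_pipeline` and the source names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class PipelineSpec:
    """Stand-in for a DAG: its id, task ids and (upstream, downstream) edges."""
    dag_id: str
    tasks: Tuple[str, ...]
    edges: Tuple[Tuple[str, str], ...]


def build_ingest_pipeline(source: str) -> PipelineSpec:
    """Same structure for every source; only names and parameters change.

    There is deliberately no `if` on `source` here: a source that needs a
    different shape gets its own, separate factory instead of a branch.
    """
    extract, validate, load = f"extract_{source}", f"validate_{source}", f"load_{source}"
    return PipelineSpec(
        dag_id=f"ingest_{source}",
        tasks=(extract, validate, load),
        edges=((extract, validate), (validate, load)),
    )


SOURCES: List[str] = ["orders", "customers", "events"]
PIPELINES = [build_ingest_pipeline(source) for source in SOURCES]
```

If one source ever needs an extra step, the advice above points towards a second factory for it rather than a flag in this one.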

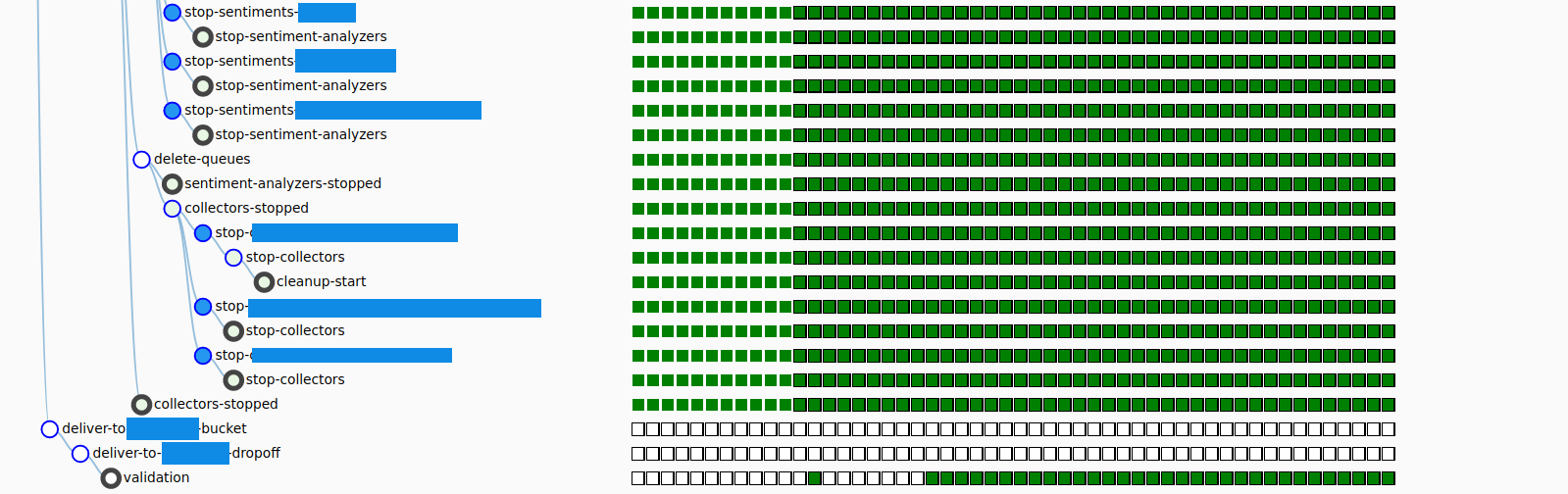

6. Avoid changing DAGs over time (tricky!)

Processes change as time passes, and DAGs must be adjusted. One of the issues I personally see in Airflow operations is the lack of DAG change history. There is no concept of DAG versions in Airflow 1.10: it only shows the latest version of a DAG, even for past executions! Be aware of that, as it can be very confusing when debugging historical DAG runs.

Let's consider two cases. When you add a new task, Airflow shows it as "not executed" for all past DAG runs. Someone who doesn't know the DAG was just changed may conclude that there was an issue in the past, that the jobs didn't run, and that they need to be rerun now. On the other hand, if you remove a task, you lose its execution history (technically the entries are still in the database, but they are not accessible through the UI).

How to approach this?

- Try to avoid changes

- Version DAGs (add a version number to the DAG file name and to the dag_id, since Airflow tracks run history by dag_id)

- Make sure the people operating Airflow know this fact, so they don't rerun past jobs because of it
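A minimal sketch of the versioning approach, assuming a hypothetical naming convention where the version appears both in the file name (for example `daily_report_v2.py`) and in the `dag_id`. Because Airflow keys run history by `dag_id`, bumping the version gives the new structure a fresh history, while the runs recorded under the previous id are left untouched. `versioned_dag_id` and `parse_dag_id` are illustrative helpers, not Airflow APIs.

```python
import re
from typing import Tuple

# Hypothetical convention: <base_name>_v<positive integer>, e.g. "daily_report_v2".
_VERSIONED_ID = re.compile(r"^(?P<base>[A-Za-z0-9_]+?)_v(?P<version>\d+)$")


def versioned_dag_id(base_name: str, version: int) -> str:
    """Build the dag_id for a given structural version of a pipeline."""
    if version < 1:
        raise ValueError("version must be a positive integer")
    return f"{base_name}_v{version}"


def parse_dag_id(dag_id: str) -> Tuple[str, int]:
    """Split a versioned dag_id back into (base_name, version)."""
    match = _VERSIONED_ID.match(dag_id)
    if match is None:
        raise ValueError(f"not a versioned dag_id: {dag_id!r}")
    return match.group("base"), int(match.group("version"))


# In daily_report_v2.py one would then write, for example:
#   dag = DAG(versioned_dag_id("daily_report", 2), ...)
```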

7. Avoid deploying without testing locally

This is a very general rule that applies to any software release. Make sure you test your DAGs. DAGs are just Python code and should be treated as such. Reject all claims that "this is ops stuff". Whoever creates a DAG and wants to deploy it should be responsible for testing it and making sure all parts work and do not break other people's DAGs or other work. It sounds obvious, but so many times a "just a typo" change has broken things. I'm guilty of that as well.
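In the local environment, where Airflow is installed, a common smoke test loads the DAG folder with `airflow.models.DagBag` and asserts that its `import_errors` dictionary is empty. The sketch below shows the same idea with only the standard library so it can be unit-tested anywhere: it executes every `.py` file under a folder as a module and collects the files that fail. `find_import_errors` is a hypothetical helper; it assumes each DAG file's own dependencies (including Airflow) are installed wherever it runs, and unlike `DagBag` it does not check DAG semantics such as cycles.

```python
import importlib.util
import traceback
from pathlib import Path
from typing import Dict


def find_import_errors(dag_folder: str) -> Dict[str, str]:
    """Import every .py file under dag_folder; return {file path: traceback}."""
    errors: Dict[str, str] = {}
    for index, path in enumerate(sorted(Path(dag_folder).rglob("*.py"))):
        try:
            # A throwaway module name per file; the module is not added to sys.modules.
            spec = importlib.util.spec_from_file_location(f"dag_check_{index}", str(path))
            module = importlib.util.module_from_spec(spec)
            # Execute the file, much as Airflow does when it parses the DAG folder.
            spec.loader.exec_module(module)
        except Exception:
            errors[str(path)] = traceback.format_exc()
    return errors
```

Run in CI before every deploy, a non-empty result blocks exactly the "just a typo" kind of change.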

KISS as a goodbye

Avoid moving parts; keep it sane and simple.